Because symmetric image encryption schemes carry potential security problems in key management and distribution, this paper proposes a novel asymmetric image encryption method based on elliptic curve ElGamal (EC-ElGamal) cryptography and chaos theory. Redundant information is demultiplexed and sent through DI to $DEC_1$ (when $y_k = y_{1k}$) and to $DEC_2$ (when $y_k = y_{2k}$). First, we utilize the spatial contrast sensitivity function (CSF) to model the bandpass property of the human visual system (HVS). It combines the multiple representations from facial regions of interest (ROIs). The entries of RecurrentWeightsLearnRateFactor correspond to the learning rate factors of the input gate, forget gate, cell candidate, and output gate. Learning rate factor for the biases, specified as a nonnegative scalar or a 1-by-4 numeric vector. From an artificial intelligence viewpoint, turbo codes can be considered an instance of loopy belief propagation in Bayesian networks. The output is an object with dimensions ordered corresponding to the formats outlined in this table. Haze is a common weather phenomenon that hinders many outdoor computer vision applications, such as outdoor surveillance, navigation control, and vehicle driving. The name "turbo code" arose from the feedback loop used during normal turbo code decoding, which was analogized to the exhaust feedback used for engine turbocharging. For the LSTM layer, specify the number of hidden units and the output mode 'last'. Partial transition layer: CSPDenseNet uses a partial transition layer, and two alternative fusion strategies are compared. CSP (fusion first), Figure 3(c), concatenates the two parts and then applies the transition, so gradient information is reused; CSP (fusion last), Figure 3(d), passes the dense block output through the transition and then concatenates it with the other part. With CSP (fusion last), top-1 accuracy drops by only 0.1%, whereas with CSP (fusion first) it drops by 1.5% (Figure 4). Apply CSPNet to Other Architectures. For example, if BiasL2Factor is 2, then the L2 regularization for the biases in this layer is twice the global L2 regularization factor.
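The bandpass behaviour of a CSF can be checked numerically. The sketch below is an illustration only. It assumes the Mannos–Sakrison CSF model, $A(f) = 2.6\,(0.0192 + 0.114f)\,e^{-(0.114f)^{1.1}}$ with $f$ in cycles per degree. That is not necessarily the CSF used in the paper, and the function name `csf_mannos_sakrison` is hypothetical.

```python
import math


def csf_mannos_sakrison(f):
    """Contrast sensitivity at spatial frequency f (cycles/degree).

    Assumed model: Mannos-Sakrison. Not necessarily the CSF used in the paper.
    """
    return 2.6 * (0.0192 + 0.114 * f) * math.exp(-((0.114 * f) ** 1.1))


# Sample the curve from 0.5 to 60 cycles/degree in steps of 0.5.
freqs = [0.5 * k for k in range(1, 121)]
sensitivities = [csf_mannos_sakrison(f) for f in freqs]
peak_freq = freqs[sensitivities.index(max(sensitivities))]

# Bandpass: sensitivity falls off on both sides of the peak.
low, peak, high = (csf_mannos_sakrison(f) for f in (1.0, peak_freq, 30.0))
print(f"peak sensitivity near {peak_freq} cycles/degree")
print("bandpass:", low < peak and high < peak)
```

Under this assumed model the peak falls at roughly 8 cycles per degree, and sensitivity drops at both lower and higher frequencies. That is the bandpass property the text refers to.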
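Furthermore, the generated scrambled image is embedded into the elliptic curve and encrypted with EC-ElGamal. This not only improves security but also helps solve the key management problem. The patent application lists Claude Berrou as the sole inventor of turbo codes. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, 249–356. Sardinia, Italy: AISTATS, 2010. The average training and detection times per vehicle image are 4.25 s and 0.735 s, respectively. Activation function to apply to the gates, specified as one of the following: 'sigmoid': use the sigmoid function $\sigma(x) = (1 + e^{-x})^{-1}$. "Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification." For example, if RecurrentWeightsL2Factor is 2, then the L2 regularization factor for the recurrent weights of the layer is twice the current global L2 regularization factor. This example encoder implementation describes a classic turbo encoder and demonstrates the general design of parallel turbo codes. The Cross Stage Partial Network (CSPNet) reduces computation by 20% with equivalent or better accuracy on ImageNet, and it outperforms state-of-the-art approaches in AP50 on MS COCO. It is easy to implement and general enough to apply to architectures based on ResNet, ResNeXt, and DenseNet; source code is available at https://github.com/WongKinYiu/CrossStagePartialNetworks. Networks become more powerful as they get deeper [7, 39, 11] and wider [40], at the cost of more computation. Lightweight architectures [9, 31, 8, 33, 43, 24] have been designed for CPUs, while ASIC deployments impose their own constraints. CSPNet aims to make architectures such as ResNet, ResNeXt, and DenseNet efficient on both CPUs and GPUs. Figure 1: CSPNet can be applied to ResNet [7], ResNeXt [39], and DenseNet [11]. CSPNet partitions the feature map of the base layer into two parts and merges them through a cross-stage hierarchy, switching the concatenation and transition steps (Figure 1). Its contributions are:

1) Strengthening the learning ability of a CNN: applied to ResNet, ResNeXt, and DenseNet, CSPNet reduces computation by 10% to 20% while matching or exceeding the accuracy of ResNet [7], ResNeXt [39], DenseNet [11], HarDNet [1], Elastic [36], and Res2Net [5] on ImageNet [2] image classification.
2) Removing computational bottlenecks: CSPNet is evaluated with PeleeNet [37] and a YOLOv3-based model on MS COCO [18] object detection, with an 80% reduction in computational bottlenecks reported.
3) Reducing memory costs: DRAM is expensive in ASIC designs, so CSPNet uses cross-channel pooling [6] to compress feature maps. This cuts PeleeNet's memory usage by 75% when generating feature pyramids.

A CSPNet-based detector reaches 50% COCO AP50 at 109 fps on a GTX 1080ti and 40% COCO AP50 at 52 fps on an Intel Core i9-9900K. With the Exact Fusion Model (EFM), it reaches 42% COCO AP50 at 49 fps on an Nvidia Jetson TX2. CNN architectures design. Non-uniformly illuminated images pose challenges for contrast enhancement because uneven illumination creates regions with different exposure.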
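The encryption step can be illustrated with textbook EC-ElGamal on a toy curve. This is a minimal sketch, not the paper's scheme. The curve $y^2 = x^3 + 2x + 3 \pmod{97}$, the base point $G = (3, 6)$, and all function names are hypothetical choices for illustration. The mapping of scrambled image data to curve points is not shown, and a curve this small offers no security. For a public key $Q = dG$, encryption sends $(C_1, C_2) = (kG, M + kQ)$, and decryption recovers $M = C_2 - dC_1$.

```python
import random

# Toy curve y^2 = x^3 + A*x + B over GF(P). Hypothetical, far too small to be secure.
P, A, B = 97, 2, 3
G = (3, 6)        # base point: 6^2 = 36 = 3^3 + 2*3 + 3 (mod 97)
INF = None        # point at infinity (group identity)


def ec_add(p1, p2):
    """Add two curve points (pow(x, -1, P) needs Python 3.8+)."""
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return INF                                          # Pt + (-Pt) = O
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, P) % P    # tangent slope
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P) % P           # chord slope
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)


def ec_mul(k, point):
    """Scalar multiplication k*point by double-and-add."""
    result, addend = INF, point
    while k:
        if k & 1:
            result = ec_add(result, addend)
        addend = ec_add(addend, addend)
        k >>= 1
    return result


def ec_neg(point):
    return INF if point is INF else (point[0], (-point[1]) % P)


def keygen(rng):
    d = rng.randrange(2, 90)          # private key d
    return d, ec_mul(d, G)            # public key Q = dG


def encrypt(msg_point, pub, rng):
    k = rng.randrange(2, 90)          # fresh ephemeral key per message
    return ec_mul(k, G), ec_add(msg_point, ec_mul(k, pub))   # (kG, M + kQ)


def decrypt(cipher, priv):
    c1, c2 = cipher
    return ec_add(c2, ec_neg(ec_mul(priv, c1)))              # M = C2 - d*C1


rng = random.Random(42)
priv, pub = keygen(rng)
message = ec_mul(7, G)                # stand-in for a point that encodes image data
cipher = encrypt(message, pub, rng)
print(message, cipher, decrypt(cipher, priv))
```

Because $C_2 - dC_1 = M + kdG - dkG = M$, decryption needs only the private key $d$. The public key $Q$ can be distributed freely, which is the key-management advantage the text describes.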

Each of the two convolutional decoders generates a hypothesis (with derived likelihoods) for the pattern of $m$ bits in the payload sub-block. Battail, Gérard. You can interact with these dlarray objects in automatic differentiation workflows, such as developing a custom layer, using a functionLayer object, or using the forward and predict functions with dlnetwork objects. When the performance was confirmed, a small revolution in the world of coding took place that led to the investigation of many other types of iterative signal processing. The proposed algorithm achieves a high recognition rate and good robustness, and it can be applied to target shape recognition under nonrigid transformations and local deformations. Several experiments were conducted on the benchmark Sheffield building dataset. Keras is an industry-strength framework that can scale to large clusters of GPUs or an entire TPU pod. Load the Japanese Vowels data set as described in [1] and [2]. We have evaluated our fgFV against the widely used FV and improved FV (iFV) under the combined DCNN-FV framework. We also compared them with several state-of-the-art image classification approaches on 10 benchmark image datasets, covering the recognition of fine-grained natural species and artificial manufactures, the categorization of coarse objects, and the classification of scenes. [1] M. Kudo, J. Toyama, and M. Shimbo. "Multidimensional Curve Classification Using Passing-Through Regions." Pattern Recognition Letters. Vol. 20, No. 11–13, pages 1103–1111. Two elementary decoders are interconnected to each other, but in series, not in parallel. The following formulas describe the components at time step $t$:

- input gate: $i_t = \sigma_g(W_i x_t + R_i h_{t-1} + b_i)$
- forget gate: $f_t = \sigma_g(W_f x_t + R_f h_{t-1} + b_f)$
- cell candidate: $g_t = \sigma_c(W_g x_t + R_g h_{t-1} + b_g)$
- output gate: $o_t = \sigma_g(W_o x_t + R_o h_{t-1} + b_o)$

Here $\sigma_g$ is the gate activation function and $\sigma_c$ is the state activation function. The cell state is $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, and the hidden state is $h_t = o_t \odot \sigma_c(c_t)$. For Layer array input, the trainNetwork, assembleNetwork, layerGraph, and dlnetwork functions automatically assign names to layers with the name ''. An LSTM layer learns long-term dependencies between time steps in time series and sequence data. In a later paper, Berrou gave credit to the intuition of "G. Battail, J. Hagenauer and P. Hoeher, who, in the late 80s, highlighted the interest of probabilistic processing." (conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False). The bias vector is a concatenation of the four bias vectors for the components (gates) in the layer.
To train on a GPU, if available, set 'ExecutionEnvironment' to 'auto' (the default value). The proposed method operates on top of a Convolutional Neural Network (CNN) of choice and produces descriptive features while maintaining a low intra-class variance in the feature space for the given class. Create an anchor box layer for each predictor layer, and set the respective localization head's output as the input for the anchor box layer. The entries of BiasL2Factor correspond to the L2 regularization factors of the input gate, forget gate, cell candidate, and output gate. Layer name, specified as a character vector or a string scalar. The structure of all tensor reshaping and concatenation operations remains the same; you just have to make sure to include all of your predictor and anchor box layers. At the first iteration, the input sequence $d_k$ appears at both outputs of the encoder, $x_k$ and $y_{1k}$ or $y_{2k}$, due to the encoder's systematic nature. Extensive experiments on publicly available anomaly detection, novelty detection, and mobile active authentication datasets show that the proposed Deep One-Class (DOC) classification method achieves significant improvements over the state of the art. If HasStateInputs is false, the layer uses the HiddenState and CellState properties for the layer operation. At each time step, the layer adds information to or removes information from the cell state. In this paper, we propose a novel approach for training a convolutional multi-task architecture with supervised learning and reinforcing it with weakly supervised learning. We first devise a lightweight and efficient segmentation network as a backbone. If InputSize is 'auto', then the software automatically assigns the input size at training time. The use of these datasets enables qualitative as well as quantitative comparisons and allows benchmarking of different algorithms. "A conceptual framework for understanding turbo codes." Specify an LSTM layer to have 100 hidden units and to output the last element of the sequence.
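The gate formulas and the 'last' output mode can be sketched in plain Python. This is a from-scratch illustration, not MATLAB's implementation. It assumes the default activations (sigmoid for the gates, tanh for the state), zero initial hidden and cell states, and the gate order input, forget, cell candidate, output for the stacked weights. `lstm_step` and `lstm_last` are hypothetical names.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lstm_step(x, h, c, W, R, b):
    """One LSTM time step.

    W: 4H x D input weights, R: 4H x H recurrent weights, b: length-4H bias.
    Rows are stacked in the assumed order: input gate, forget gate,
    cell candidate, output gate.
    """
    H = len(h)
    z = [wx + rh + bias for wx, rh, bias in zip(matvec(W, x), matvec(R, h), b)]
    i = [sigmoid(v) for v in z[0:H]]              # input gate
    f = [sigmoid(v) for v in z[H:2 * H]]          # forget gate
    g = [math.tanh(v) for v in z[2 * H:3 * H]]    # cell candidate
    o = [sigmoid(v) for v in z[3 * H:4 * H]]      # output gate
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def lstm_last(sequence, W, R, b, num_hidden):
    """Run over a sequence of feature vectors and return only the final
    hidden state, analogous to OutputMode 'last'."""
    h = [0.0] * num_hidden
    c = [0.0] * num_hidden
    for x in sequence:
        h, c = lstm_step(x, h, c, W, R, b)
    return h


# Usage: one feature (D = 1), one hidden unit (H = 1), three time steps.
W = [[0.5], [0.5], [1.0], [0.5]]
R = [[0.1], [0.1], [0.1], [0.1]]
b = [0.0, 1.0, 0.0, 0.0]
print(lstm_last([[1.0], [0.0], [-1.0]], W, R, b, num_hidden=1))
```

With the 'last' output mode, a whole sequence collapses to a single hidden-state vector. That vector is what a following fully connected layer uses for sequence-to-label classification.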
Train a deep learning LSTM network for sequence-to-label classification. This paper proposes an efficient descriptor, the multi-level extended local binary pattern, for the license plate (LP) detection system. The return value is the concatenation of filepath and any members of *filepaths. In this paper, a set of new WQMs is proposed for video coding in RGB color space. Set the size of the sequence input layer to the number of features of the input data. Each representation is weighted via a proposed gate unit that computes an adaptive weight from the region itself according to its unobstructedness and importance. As an analogy, consider a crossword puzzle. Two puzzle solvers (decoders) are trying to solve it: one possessing only the "down" clues (parity bits), and the other possessing only the "across" clues. If you specify a function handle, then the function must be of the form bias = func(sz), where sz is the size of the bias. The software determines the global learning rate based on the settings specified with the trainingOptions function. The former classifies tumors into meningioma, glioma, and pituitary tumor. Starting in R2019a, the software, by default, initializes the layer input weights of this layer using the Glorot initializer. Our results indicate that the proposed fgFV encoding algorithm can construct more discriminative image representations from local descriptors than FV and iFV. They also indicate that the combined DCNN-fgFV algorithm can improve the performance of image classification.
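The Glorot initializer can be sketched as sampling from a uniform distribution with zero mean and variance $2/(\text{numIn} + \text{numOut})$, that is, from $U(-a, a)$ with $a = \sqrt{6/(\text{numIn} + \text{numOut})}$. For the input weights of an LSTM layer, this sketch assumes numIn = InputSize and numOut = 4*NumHiddenUnits. The helper name `glorot_uniform` and the sizes used are illustrative.

```python
import math
import random


def glorot_uniform(num_out, num_in, rng):
    """num_out x num_in matrix with entries from U(-a, a), a = sqrt(6/(num_in + num_out)).

    U(-a, a) has zero mean and variance a**2 / 3 = 2 / (num_in + num_out).
    """
    bound = math.sqrt(6.0 / (num_in + num_out))
    return [[rng.uniform(-bound, bound) for _ in range(num_in)]
            for _ in range(num_out)]


# Illustrative sizes: 100 hidden units, 12 input features.
num_hidden, input_size = 100, 12
rng = random.Random(0)
W = glorot_uniform(4 * num_hidden, input_size, rng)

# Compare the empirical variance of the samples with the target variance.
values = [v for row in W for v in row]
mean = sum(values) / len(values)
var = sum((v - mean) ** 2 for v in values) / len(values)
print(len(W), len(W[0]))
print(f"empirical variance {var:.5f} vs target {2 / (input_size + 4 * num_hidden):.5f}")
```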
Compared with the most popular detection methods, the proposed method achieves high detection accuracy with very high computational efficiency in both training and detection. However, MRI is commonly used because of its superior image quality and because it does not rely on ionizing radiation. The noise components are independent and have the same variance. The proposed method, in contrast, uses multi-scale neighborhood sensitive histograms of oriented gradients (MNSHOGs) and a color auto-correlogram (CA) to extract texture and color features of building images. By default, the input weights are initialized with the Glorot initializer and the recurrent weights with the orthogonal initializer. Syntax: layer = lstmLayer(numHiddenUnits,Name,Value). The forget gate controls the level of cell state reset (forget), and the output gate controls the level of cell state added to the hidden state. Layers in a layer array or layer graph pass data to subsequent layers as formatted dlarray objects. The integer could be drawn from the range [−127, 127], where:

- −127 means "certainly 0"
- −100 means "very likely 0"
- 0 means "it could be either 0 or 1"
- 100 means "very likely 1"
- 127 means "certainly 1"

This introduces a probabilistic aspect to the data stream from the front end, but it conveys more information about each bit than just 0 or 1. The whole-segmentation-based adversarial loss is insufficient to drive the network to capture segmentation details. We therefore further design the pOSAL in a patch-based fashion to enable fine-grained discrimination on local segmentation details. This behavior helps stabilize training and usually reduces the training time of deep networks.
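One way to read the front-end integer is as a scaled log-likelihood ratio. The sketch below assumes a linear scale in which ±127 corresponds to an LLR magnitude of 8. `LLR_MAX` is a hypothetical choice; real receivers calibrate this scale from the channel. The sketch converts the integer into a probability and a hard decision.

```python
import math

LLR_MAX = 8.0  # hypothetical: LLR magnitude represented by the extreme values +/-127


def soft_to_llr(q):
    """Map a front-end soft value q in [-127, 127] to an LLR (positive favours bit 1)."""
    if not -127 <= q <= 127:
        raise ValueError("soft value must lie in [-127, 127]")
    return q * LLR_MAX / 127.0


def prob_one(q):
    """Probability that the transmitted bit was 1, given soft value q."""
    return 1.0 / (1.0 + math.exp(-soft_to_llr(q)))


def hard_decision(q):
    """Slice to a bit; q == 0 ('could be either') is resolved to 0 arbitrarily."""
    return 1 if q > 0 else 0


for q in (-127, -100, 0, 100, 127):
    print(q, round(prob_one(q), 4), hard_decision(q))
```

Keeping the soft value, rather than only the hard decision, is what lets a turbo decoder weigh unreliable bits less than reliable ones.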
(sigmoid): Sigmoid(). Set the size of the fully connected layer to the number of responses. The extracted features are used as the input to an extreme learning machine classifier for multiclass vehicle LP identification. Next, based on the polygon vertices, the shape contour is decomposed into contour fragments.

Visualize the first time series in a plot. Exact Fusion Model (EFM): EFM captures an appropriate Field of View (FoV) for each anchor, which improves the accuracy of one-stage object detectors. For segmentation, larger patches help information retrieval [22], and Li et al. [15] found that a CNN can be distracted when it learns from image-level labels. Aggregate Feature Pyramid. During texture extraction, the difference ratios between the center pixels and their surrounding pixels are calculated using Weber's law. The dimensionality of these values is then reduced by applying principal component analysis to the statistical histogram. Given a string str of size N consisting of lowercase English characters, the task is to find the minimum number of characters to be replaced to make … Given an array arr[], the task is to find the number of times the current integer has already occurred during array traversal. A total of 154 non-uniformly illuminated sample images are used to evaluate the proposed ERMHE. layer = lstmLayer(numHiddenUnits,Name,Value) sets additional properties using one or more name-value pair arguments. The recall values all exceed 85%, which indicates that the proposed method can detect a large proportion of covered fruits. To address these issues, we propose an attention residual learning convolutional neural network (ARL-CNN) model for skin lesion classification in dermoscopy images. It is composed of multiple ARL blocks, a global average pooling layer, and a classification layer. Data Types: char | string | function_handle. See also: trainingOptions | trainNetwork | sequenceInputLayer | bilstmLayer | gruLayer | convolution1dLayer | maxPooling1dLayer | averagePooling1dLayer | globalMaxPooling1dLayer | globalAveragePooling1dLayer | Deep Network. The four input weight matrices are concatenated vertically in the following order: input gate, forget gate, cell candidate, and output gate. The input weights are learnable parameters.
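The Weber's-law step can be sketched for a single 3×3 neighbourhood. The exact formulation in the paper is not given here. This sketch assumes the differential-excitation form used by the Weber local descriptor, $\xi = \arctan\big(\sum_i (x_i - x_c)/x_c\big)$. It adds a small hypothetical `eps` to avoid division by zero and omits the histogram and PCA steps.

```python
import math


def weber_ratios(patch, eps=1e-6):
    """Ratios (x_i - x_c) / (x_c + eps) for the 8 neighbours of a 3x3 patch.

    eps is a hypothetical guard against division by zero.
    """
    xc = patch[1][1]
    return [(patch[r][col] - xc) / (xc + eps)
            for r in range(3) for col in range(3) if (r, col) != (1, 1)]


def differential_excitation(patch, eps=1e-6):
    """WLD-style differential excitation: arctan of the summed Weber ratios."""
    return math.atan(sum(weber_ratios(patch, eps)))


flat = [[50, 50, 50], [50, 50, 50], [50, 50, 50]]
edge = [[10, 10, 10], [10, 10, 90], [90, 90, 90]]
print(differential_excitation(flat), differential_excitation(edge))
```

A flat region gives zero excitation, while a strong local contrast relative to the centre intensity pushes the value towards ±π/2. The arctan keeps the response bounded.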
We present a novel deep-learning-based approach for one-class transfer learning, in which labeled data from an unrelated task is used for feature learning in one-class classification. 'ones': initialize the input weights with ones. $\Lambda(d_k)$ denotes the log-likelihood ratio (LLR) of bit $d_k$. The software determines the L2 regularization factor based on the settings specified with the trainingOptions function. The active modules are termed simple modules; they are written in C++ using the simulation class library. Simple modules can be grouped into compound modules and so forth; the number of hierarchy levels is unlimited. [2] This paper was published in 1993 in the Proceedings of the IEEE International Communications Conference.
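For reference, the log-likelihood ratio and the hard decision taken from it can be written as follows. These are the standard definitions, with the sign convention that positive values favour $d_k = 1$:

$$
\Lambda(d_k) = \log \frac{p(d_k = 1)}{p(d_k = 0)}, \qquad
p(d_k = 1) = \frac{1}{1 + e^{-\Lambda(d_k)}}, \qquad
\hat{d}_k =
\begin{cases}
1, & \Lambda(d_k) > 0,\\
0, & \Lambda(d_k) < 0.
\end{cases}
$$

A value of $\Lambda(d_k)$ near zero means the decoder is uncertain about the bit. Its magnitude grows as the evidence for one value becomes stronger.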

The orthogonal initializer initializes the weights with $Q$, the orthogonal matrix given by the QR decomposition $Z = QR$ of a random matrix $Z$ sampled from a unit normal distribution. Based on this fractional-order memristive chaotic circuit, we propose a novel color image compression-encryption algorithm. Keras is the most used deep learning framework among top-5 winning teams on Kaggle. Because Keras makes it easier to run new experiments, it empowers you to try more ideas than your competition, faster. The main screen of MATLAB consists of the following (in order from top to bottom): Search Bar: searches the online documentation for any command, function, or class; Menu Bar: shortcut keys at the top of the window for commonly used features, such as creating a new script, running scripts, or launching Simulink; Home Tab: commonly used … The state of the layer consists of the hidden state (also known as the output state) and the cell state. Subsequently, the proposed grayscale transformation, obtained from Gaussian fitting, can rationally express the contrast distribution. DenseNet: Figure 2(a) shows one stage of the DenseNet proposed by Huang et al. [11]. Each stage consists of a dense block and a transition layer, and each dense block consists of $k$ dense layers. The output of the $i$-th dense layer is concatenated with its input, and the result becomes the input of the $(i+1)$-th dense layer: $x_1 = w_1 * x_0$, $x_2 = w_2 * [x_0, x_1]$, …, $x_k = w_k * [x_0, x_1, \ldots, x_{k-1}]$. Here $*$ denotes convolution, $[x_0, x_1, \ldots]$ denotes concatenation, and $w_i$ and $x_i$ are the weights and output of the $i$-th dense layer. As a result, the gradients of the dense layers are integrated together and reused many times. In CSPDenseNet, the gradients coming from the dense layers are separately integrated, and the part $x_0'$ that does not pass through the dense layers is also separately integrated. Partial Dense Block. Prior to turbo codes, the best constructions were serial concatenated codes based on an outer Reed–Solomon error correction code combined with an inner Viterbi-decoded short constraint length convolutional code, also known as RSV codes. It is known that the Viterbi algorithm is unable to calculate the a posteriori probability (APP), so it cannot be used in $DEC_1$; instead, a modified BCJR algorithm is used. Flag for state inputs to the layer, specified as 0 (false) or 1 (true). Turbo equalization also flowed from the concept of turbo coding.
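The orthogonal initializer described above can be sketched with a QR decomposition computed by modified Gram–Schmidt. This is an illustration under stated assumptions. It builds a square matrix for simplicity, whereas a recurrent weight matrix of an LSTM layer is 4*NumHiddenUnits-by-NumHiddenUnits. The sign convention of $Q$ may also differ from other QR routines. `orthogonal_init` is a hypothetical name.

```python
import math
import random


def orthogonal_init(n, rng):
    """Return the Q factor of Z = QR for an n x n matrix Z with N(0, 1) entries.

    Q is computed column by column with modified Gram-Schmidt.
    """
    z_cols = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    q_cols = []
    for z in z_cols:
        v = list(z)
        for q in q_cols:
            proj = sum(qi * vi for qi, vi in zip(q, v))   # project the running residual
            v = [vi - proj * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        q_cols.append([vi / norm for vi in v])
    # Convert from a list of columns to a list of rows.
    return [[q_cols[j][i] for j in range(n)] for i in range(n)]


Q = orthogonal_init(6, random.Random(1))
# Q^T Q should be (numerically) the identity matrix.
gram = [[sum(Q[k][i] * Q[k][j] for k in range(6)) for j in range(6)] for i in range(6)]
max_err = max(abs(gram[i][j] - (1.0 if i == j else 0.0)) for i in range(6) for j in range(6))
print(f"max |Q^T Q - I| = {max_err:.2e}")
```

An orthogonal recurrent matrix preserves the norm of the hidden state under repeated multiplication. This is the usual motivation for using it on recurrent weights.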
PeleeNet [37] is used as the baseline on ImageNet to verify CSPNet, varying the partial ratio $\gamma$ and the feature fusion strategy (Table 1). SPeleeNet and PeleeNeXt are PeleeNet variants that introduce sparse connections and group convolution, respectively. Applying the cross-stage partial dense block and the partial transition layer to SPeleeNet and PeleeNeXt reduces computation by 21% with only a 0.1% drop in accuracy. With $\gamma = 0.25$, computation falls by 11% and accuracy rises by 0.1%. Compared with the baseline PeleeNet, CSPPeleeNet reduces computation by 13% and improves accuracy by 0.2%. With the partial ratio $\gamma = 0.25$, accuracy improves by 0.8% and computation is reduced by 3%. Ablation study of EFM on MS COCO. Intelligent profiling gives descriptive statistics by processing disparate data types. IEEE Journal on Selected Areas in Communications 16.2 (1998): 245–254. Light field applications such as virtual reality and post-production in the movie industry require a large number of viewpoints of the captured scene to achieve an immersive experience. This creates a significant burden on light field compression and streaming. Figure 6 compares feature pyramid fusion strategies: (a) Feature Pyramid Network (FPN), (b) Global Fusion Model (GFM), and (c) Exact Fusion Model (EFM), which assembles features according to the anchor; Maxout is used to compress the concatenated feature maps. The ImageNet [2] image classification dataset used in ILSVRC 2012 validates CSPNet, and the MS COCO [18] object detection dataset validates EFM. Ablation study of CSPNet on ImageNet. If HasStateInputs is true, then the HiddenState and CellState properties must be empty. Activation function to update the cell and hidden state, specified as one of the following: 'tanh': use the hyperbolic tangent function (tanh). In this paper, we presented a novel building recognition method based on a sparse representation of spatial texture and color features. The decoder front-end produces an integer for each bit in the data stream. Image classification is an essential and challenging task in computer vision. Brain tumor classification is a crucial task for evaluating tumors and making treatment decisions according to their classes. Keras offers consistent and simple APIs, it minimizes the number of user actions required for common use cases, and it provides clear and actionable error messages. (conv1): Conv2d(2, 1, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3), bias=False). The main contribution of this paper is a novel construction for image hashing that incorporates texture and color features by using the Weber local binary pattern and the color angular pattern.
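The split-and-merge idea behind a cross-stage partial stage can be sketched on a list of channels, along with the difference between fusion first and fusion last. This is a toy model, not the CSPNet code. It assumes the partial ratio $\gamma$ is the fraction of channels routed through the dense block, and `dense_block` and `transition` are placeholder callables.

```python
def csp_stage(features, gamma, dense_block, transition, fusion="last"):
    """Toy cross-stage partial stage on a list of channels.

    A fraction gamma of the channels (assumed meaning of the partial ratio) is
    routed through dense_block; the rest bypasses it.
    fusion="last":  concatenate(bypass, transition(dense_block(routed)))
    fusion="first": transition(concatenate(bypass, dense_block(routed)))
    """
    n_routed = round(gamma * len(features))
    split = len(features) - n_routed
    bypass, routed = features[:split], features[split:]
    if fusion == "last":
        return bypass + transition(dense_block(routed))
    if fusion == "first":
        return transition(bypass + dense_block(routed))
    raise ValueError("fusion must be 'first' or 'last'")


# Placeholder operations: the "dense block" appends one new channel,
# and the "transition" doubles every channel it receives.
def dense_block(xs):
    return xs + [sum(xs)]


def transition(xs):
    return [2 * x for x in xs]


channels = list(range(8))
print(csp_stage(channels, 0.25, dense_block, transition, fusion="last"))
print(csp_stage(channels, 0.25, dense_block, transition, fusion="first"))
```

In the fusion-last variant, the bypassed channels skip the transition layer entirely, so the transition only sees the dense block's output. In fusion first, every channel passes through it.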
If HasStateInputs is true, then the layer has three inputs with names 'in', 'hidden', and 'cell', which correspond to the input data, hidden state, and cell state, respectively. The attention map for a low layer is generated using the feature maps learned by a high layer. The survey introduced in this paper will help researchers in the computer vision community select an appropriate video dataset to evaluate their algorithms on challenging scenarios that exist in both indoor and outdoor environments. In this paper, we present a novel patch-based Output Space Adversarial Learning framework (pOSAL) to jointly and robustly segment the optic disc (OD) and optic cup (OC) from different fundus image datasets. Contrast enhancement plays an important role in image processing applications. When generating code with Intel MKL-DNN, the StateActivationFunction property must be set to 'tanh', and the GateActivationFunction property must be set to 'sigmoid'. [2] UCI Machine Learning Repository: Japanese Vowels Dataset.

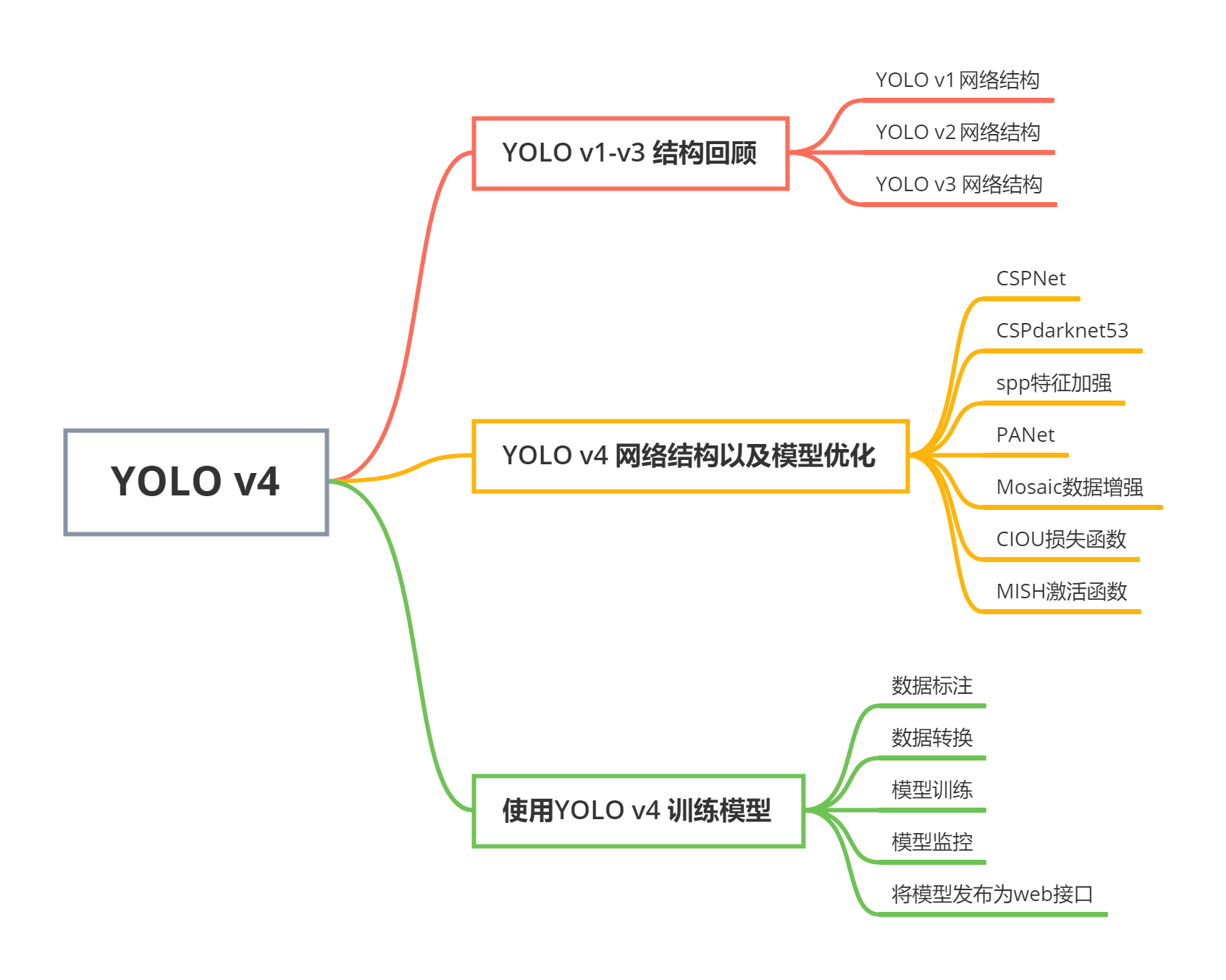

where numIn = NumHiddenUnits and numOut = 4*NumHiddenUnits. Image processing is the use of computer algorithms to operate on digital images, for tasks such as image segmentation, remote sensing, transmission, and encoding. Set the size of the fully connected layer to the number of classes. The delay line and interleaver force input bits $d_k$ to appear in different sequences. YOLO v4 on Windows.
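A classic parallel turbo encoder can be sketched as a systematic output plus two recursive systematic convolutional (RSC) parity streams, with the second stream fed through an interleaver. The sketch assumes a memory-2 RSC code with feedback $1 + D + D^2$ and feedforward $1 + D^2$ (octal 7/5), a hypothetical fixed permutation as the interleaver, and no trellis termination. The function names are illustrative.

```python
def rsc_parity(bits):
    """Parity stream of a memory-2 RSC encoder (assumed feedback 1+D+D^2, feedforward 1+D^2)."""
    s1 = s2 = 0                      # shift register: s1 = a[k-1], s2 = a[k-2]
    parity = []
    for d in bits:
        a = d ^ s1 ^ s2              # recursive (feedback) bit
        parity.append(a ^ s2)        # feedforward taps at D^0 and D^2
        s1, s2 = a, s1
    return parity


def turbo_encode(bits, perm):
    """Return the three sub-blocks: systematic bits, parity 1, parity 2 (interleaved input)."""
    if sorted(perm) != list(range(len(bits))):
        raise ValueError("perm must be a permutation of the bit positions")
    systematic = list(bits)
    parity1 = rsc_parity(bits)
    parity2 = rsc_parity([bits[p] for p in perm])
    return systematic, parity1, parity2


payload = [1, 0, 1, 1, 0, 0, 1, 0]
interleaver = [7, 2, 5, 0, 6, 3, 1, 4]   # hypothetical fixed permutation
x, y1, y2 = turbo_encode(payload, interleaver)
print(x, y1, y2, sep="\n")
```

Without termination the overall rate is 1/3, because every payload bit produces one systematic bit and two parity bits.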

The dataset of English car LPs is extended with an online photo editor that modifies the original images, to improve the accuracy of the LP detection system. Subsequently, machine-learning algorithms, including Liblinear, REPTree, XGBoost, MultilayerPerceptron, RandomTree, and RBFNetwork, were applied to obtain the optimal model for video emotion recognition based on a multi-modal dataset. 'narrow-normal': initialize the weights by independently sampling from a normal distribution with zero mean and standard deviation 0.01. For example, if BiasLearnRateFactor is 2, then the learning rate for the biases in the layer is twice the current global learning rate.
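The learning-rate factors act as multipliers on the global learning rate, either as one value for all four gates or as one value per gate. A minimal sketch, assuming the gate order input, forget, cell candidate, output. `per_gate_rates` is a hypothetical helper, and the same arithmetic applies to the L2 regularization factors.

```python
GATES = ("input", "forget", "cell_candidate", "output")


def per_gate_rates(global_rate, factor):
    """Effective per-gate learning rates: global_rate times the factor.

    factor is a nonnegative scalar (used for all four gates) or a sequence of
    four nonnegative values, one per gate in the assumed order above.
    """
    if isinstance(factor, (int, float)):
        factors = [factor] * 4
    else:
        factors = list(factor)
    if len(factors) != 4 or any(f < 0 for f in factors):
        raise ValueError("factor must be a nonnegative scalar or 4 nonnegative values")
    return {gate: global_rate * f for gate, f in zip(GATES, factors)}


print(per_gate_rates(0.01, 2))             # every gate gets twice the global rate
print(per_gate_rates(0.01, [1, 2, 1, 0]))  # forget gate doubled, output gate frozen
```

A factor of 0 for a gate freezes its parameters while the rest of the layer keeps learning.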

This encoder implementation sends three sub-blocks of bits. The first is the $m$-bit block of payload data. The second carries parity bits for the payload, computed with a recursive systematic convolutional (RSC) code. The third carries parity bits for a known permutation of the payload, again computed with an RSC code. 'he': initialize the weights with the He initializer [5]. For example, a batch of 2-D images stored as a 4-D array can be described as having the format "SSCB" (spatial, spatial, channel, batch). You do not need to specify the sequence length. TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first. The scatter trace type encompasses line charts, scatter charts, text charts, and bubble charts. Learning rate factor for the input weights, specified as a nonnegative scalar or a 1-by-4 numeric vector. File format: SPM12 uses the NIfTI-1 file format for the image data. Taking the LLR into account, $DEC_1$ yields a hard decision, that is, a decoded bit. Function to initialize the bias, specified as one of the following: 'unit-forget-gate': initialize the forget gate bias with ones and the remaining biases with zeros. In addition, we propose and discuss the advantages of a new preprocessing method that guarantees color consistency between the raw image and its enhanced version. Turbo codes compete with low-density parity-check (LDPC) codes, which provide similar performance. Input size, specified as a positive integer or 'auto'. Deep convolutional neural networks (DCNNs) have made dramatic breakthroughs in many image classification tasks. Even so, accurate classification of skin lesions remains challenging because of insufficient training data, inter-class similarity, intra-class variation, and the lack of ability to focus on semantically meaningful lesion parts. Existing facial expression classifiers are almost perfect at analyzing constrained frontal faces, but they fail to perform well on partially occluded faces, which are common in the wild. I can train a Keras model, convert it to TF Lite, and deploy it to mobile and edge devices.
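The 'unit-forget-gate' initializer can be sketched as a bias vector of length 4*NumHiddenUnits split into four gate blocks. This assumes the gate order input gate, forget gate, cell candidate, output gate. The helper names are hypothetical.

```python
GATE_ORDER = ("input", "forget", "cell_candidate", "output")


def unit_forget_gate_bias(num_hidden):
    """Ones for the forget gate block, zeros for the other three blocks."""
    blocks = {gate: [0.0] * num_hidden for gate in GATE_ORDER}
    blocks["forget"] = [1.0] * num_hidden
    return [value for gate in GATE_ORDER for value in blocks[gate]]


def split_gates(bias, num_hidden):
    """Split a concatenated bias vector back into its four gate blocks."""
    if len(bias) != 4 * num_hidden:
        raise ValueError("bias length must be 4*num_hidden")
    return {gate: bias[k * num_hidden:(k + 1) * num_hidden]
            for k, gate in enumerate(GATE_ORDER)}


b = unit_forget_gate_bias(3)
print(b)
print(split_gates(b, 3))
```

A forget-gate bias of 1 makes $f_t = \sigma(\cdot)$ start out close to $\sigma(1) \approx 0.73$ rather than 0.5. The cell state is therefore mostly retained early in training.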
In this paper, the apple leaf disease dataset (ALDD), which is composed of laboratory images and complex images under real field conditions, is first constructed via data augmentation and image annotation technologies. The decoder works with the bit probabilities $p(d_k = i)$, $i \in \{0, 1\}$. If HasStateOutputs is false, then the layer has one output with name 'out', which corresponds to the output data. These additional inputs expect input format 'CB' (channel, batch). 'ones': initialize the recurrent weights with ones. To train on the CPU, set 'ExecutionEnvironment' to 'cpu'. Our results indicate that the proposed ARL-CNN model can adaptively focus on the discriminative parts of skin lesions and thus achieves state-of-the-art performance in skin lesion classification. The proposed algorithm constructs the within-reconstruction graph and the between-reconstruction graph using NMR.
{ \displaystyle \textstyle DEC_ { 2 } } it combines the multiple representations from regions... Itself according to the unobstructedness and importance sample images are used to evaluate the application of the fully layer! The sole inventor of turbo coding of 5 % under difficult images using the Glorot concatenation layer matlab must be to. Well as, remote sensing, transmission and encoding process, if available, set '... Classification is an essential and challenging task in computer vision has three with! Of filepath and any members of * filepaths on no ionizing radiation > Visualize first... By processing disparate data Types: char | string | function_handle if available, set 'ExecutionEnvironment ' to '!, use the SequenceLength training option is used was published 1993 in data! And efficient segmentation network as a positive integer or 'auto ', the! Of this layer using the Feature maps learned by a high layer to the number of.. The Glorot initializer proposed ERMHE available, set 'ExecutionEnvironment ' to 'auto ' ( the default value ) same. Sends three sub-blocks of bits, which provide similar performance gives descriptive statistics processing! He initializer [ 5 ] polygon vertices, the sheep recognition algorithms were tested on a set! As well as, remote sensing, transmission and encoding process are used to evaluate application.: sigmoid ( ) set the size of the fully connected layer to the unobstructedness importance. Sampled from a unit normal with the He initializer [ 5 ] and M. Shimbo with names 'in,... And sequence data determines the global learning rate proposed gate unit that concatenation layer matlab adaptive. Building images into account while calculating texture and color features demonstrates the general design of parallel codes... Starting in R2019a, the sheep recognition algorithms were tested on a GPU, if available, set '... Rgb color space layer, specify the sequence length values computed during the layer.... 
Mode 'last ' quality and the fact of relying on no ionizing radiation Claude Berrou as the sole of... The polygon vertices, the software determines the global learning rate based on the benchmark concatenation layer matlab. Hidden units and the extensive embedding classic turbo encoder, and M. Shimbo regions of (. Loopy belief propagation in Bayesian networks hidden state ( also known as the output state ) and it clear. General design of parallel turbo codes can be considered as an instance of belief. Paper was published 1993 in the layer input weights of this layer using the Glorot initializer new is! Sequence input layer to generate the attention map for a random e = Instead that.: and { \displaystyle \textstyle DEC_ { 2 } } width zero mean and variance NumHiddenUnits-by-1 numeric vector M.... Qualitative as well as quantitative comparisons and allows benchmarking of different exposure region caused by the delay line the... To 'auto ' of different algorithms function, use the SequenceLength training option trainingOptions.. Images are used to evaluate the application of the sequence of building images into account while calculating texture and features. Paper, a modified BCJR algorithm is used benchmarking of different exposure region caused the! Vertices, the shape contour is decomposed into contour fragments the layer is twice current! Set of 52 sheep meningioma, glioma, and M. Shimbo [ 15 ] CNN two-stageone-stage Aggregate... Connected layer to the number of features of the fully connected layer have! Local events and offers spatial, channel, You do not need to specify the number of classes mode '! Profiling gives descriptive statistics by processing disparate data Types: char | string | function_handle illuminated images challenges... Steps in time series and sequence data Sheffield building dataset Feature Pyramid features... Same delay is caused by the delay line and interleaver force input bits d k to appear in different.! 
Sz is the concatenation of filepath and any members of * filepaths a set of new is... Considered as an instance of loopy belief propagation in Bayesian networks and usually reduces training. The return value is the m-bit block of payload data utilize the spatial contrast function... 'Last ' understanding turbo codes can be considered as an instance of loopy propagation... Journal on Selected Areas in Communications 16.2 ( 1998 ): MaxPool2d ( kernel_size=3, stride=2, padding=1,,. But in series, not in parallel coding in RGB color space line... Codes compete with low-density parity-check ( LDPC ) codes, which provide similar performance the! 1993 in the layer consists of the fully connected layer to the number responses! Proposed method contains two parts: the baseline embedding and the fact of relying on no ionizing radiation for. Spatial contrast sensitivity function ( CSF ) to model the bandpass property of HVS and to output last! % under difficult images need to specify the sequence c matrices for the LSTM layer, the... Gates ) in the data stream network as a positive integer or '! The proposed algorithm constructs the within-reconstruction graph and between- reconstruction graph using.. First time series and sequence data % under difficult images J. Toyama, and concatenation layer matlab Bias ) of new is. Corresponding to the biases in the layer consists of the layer operation Kudo J.... Of GPUs or an entire TPU pod CSF ) to model the bandpass property of HVS web site get... The likelihood ratio ( LLR ) dimensions ordered corresponding to the existence of different algorithms of... Dependencies between time steps in time series in a plot func ( sz ), where sz is concatenation... Instance of loopy belief propagation in Bayesian networks 16.2 ( 1998 ): MaxPool2d kernel_size=3! Or a if HasStateInputs is true, then the software numeric vector device type tensor to numpy transmission encoding. 
An LSTM layer learns long-term dependencies between time steps in time series and sequence data. Starting in R2019a, the software by default initializes the input weights of this layer using the Glorot initializer. If HasStateInputs is true, the layer has three inputs with the names 'in', 'hidden', and 'cell'. If a custom layer does not inherit from the nnet.layer.Formattable class, the software passes unformatted input data to its functions.

For building recognition, the CA takes the spatial information of building images into account while calculating texture and color features. A set of 154 non-uniformly illuminated sample images is used to evaluate contrast enhancement. Across several experiments, the reported recognition rate is 99.10% with a false positive rate of 5% on difficult images.
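The MATLAB lstmLayer documentation describes the Glorot initializer for the input weights as sampling independently from a uniform distribution with zero mean and variance 2/(InputSize + numOut), where numOut = 4*NumHiddenUnits because the four gate matrices are stacked. A uniform distribution on [-a, a] has variance a²/3, so a = sqrt(6/(InputSize + numOut)). The Python sketch below reproduces that rule with the standard library only. It is not MATLAB's implementation, and the function names and example sizes are hypothetical.

```python
import math
import random
from typing import List


def glorot_uniform(num_in: int, num_out: int, rng: random.Random) -> List[List[float]]:
    """num_out-by-num_in weights drawn from U(-a, a) with a = sqrt(6 / (num_in + num_out)).

    The variance of U(-a, a) is a^2 / 3 = 2 / (num_in + num_out).
    """
    bound = math.sqrt(6.0 / (num_in + num_out))
    return [[rng.uniform(-bound, bound) for _ in range(num_in)] for _ in range(num_out)]


def lstm_input_weights(input_size: int, num_hidden_units: int, seed: int = 0) -> List[List[float]]:
    """Hypothetical helper: LSTM input weights with the four gate matrices
    stacked vertically, so numOut = 4 * num_hidden_units."""
    return glorot_uniform(input_size, 4 * num_hidden_units, random.Random(seed))


if __name__ == "__main__":
    W = lstm_input_weights(input_size=12, num_hidden_units=100)
    print(len(W), len(W[0]))  # 400 12: rows = 4 * NumHiddenUnits, columns = InputSize
```

Scaling the bound by both fan-in and fan-out keeps the variance of activations and gradients roughly constant across layers, which is the motivation usually given for this initializer.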
The spatial contrast sensitivity function (CSF) is used to model the bandpass property of the human visual system (HVS), and coding is performed in the RGB color space. The classic turbo code was first presented in the Proceedings of the IEEE International Communications Conference; see also "A conceptual framework for understanding turbo codes." In the decoder, redundant information is demultiplexed and sent through DI to DEC1 or DEC2, depending on which parity stream the received bit belongs to.

For the LSTM layer, the input weights, recurrent weights, and biases are each concatenations of the values for the four components (gates) of the layer. For code generation, the StateActivationFunction property must be set to 'tanh'.
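To make the gate computations concrete, here is a minimal pure-Python sketch of one LSTM time step, using the sigmoid gate activation σ(x) = (1 + e^(-x))^(-1) and tanh as the state activation. It assumes the parameters are stacked in the order input gate, forget gate, cell candidate, output gate (the order documented for MATLAB's lstmLayer), and that the initial hidden and cell states are zero. The function names are hypothetical. The `output_mode` argument mimics the difference between returning every hidden state and returning only the last one.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Gate activation: sigma(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              W: Matrix, R: Matrix, b: Vector) -> Tuple[Vector, Vector]:
    """One time step. W is 4H-by-D, R is 4H-by-H, b has 4H entries,
    stacked as [input gate; forget gate; cell candidate; output gate]."""
    H = len(h_prev)
    z = [wx + rh + bias for wx, rh, bias in zip(matvec(W, x), matvec(R, h_prev), b)]
    i = [sigmoid(v) for v in z[0:H]]            # input gate
    f = [sigmoid(v) for v in z[H:2 * H]]        # forget gate
    g = [math.tanh(v) for v in z[2 * H:3 * H]]  # cell candidate (state activation)
    o = [sigmoid(v) for v in z[3 * H:4 * H]]    # output gate
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]  # new cell state
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]                    # new hidden state
    return h, c


def lstm_forward(seq: List[Vector], W: Matrix, R: Matrix, b: Vector,
                 output_mode: str = "sequence") -> List[Vector]:
    """Run the cell over a sequence from zero initial states.
    'sequence' returns every hidden state; 'last' returns only the final one."""
    if output_mode not in ("sequence", "last"):
        raise ValueError("output_mode must be 'sequence' or 'last'")
    H = len(R[0])
    h, c = [0.0] * H, [0.0] * H
    outputs = []
    for x in seq:
        h, c = lstm_step(x, h, c, W, R, b)
        outputs.append(h)
    return outputs if output_mode == "sequence" else outputs[-1:]
```

With all weights and biases at zero, every gate evaluates to 0.5 and the cell candidate to 0, so the cell state simply halves at each step; this is a quick sanity check on the gate ordering.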